Open web measurement

by Corina Alonso

Open web measurement: Audience measurement of open web campaigns

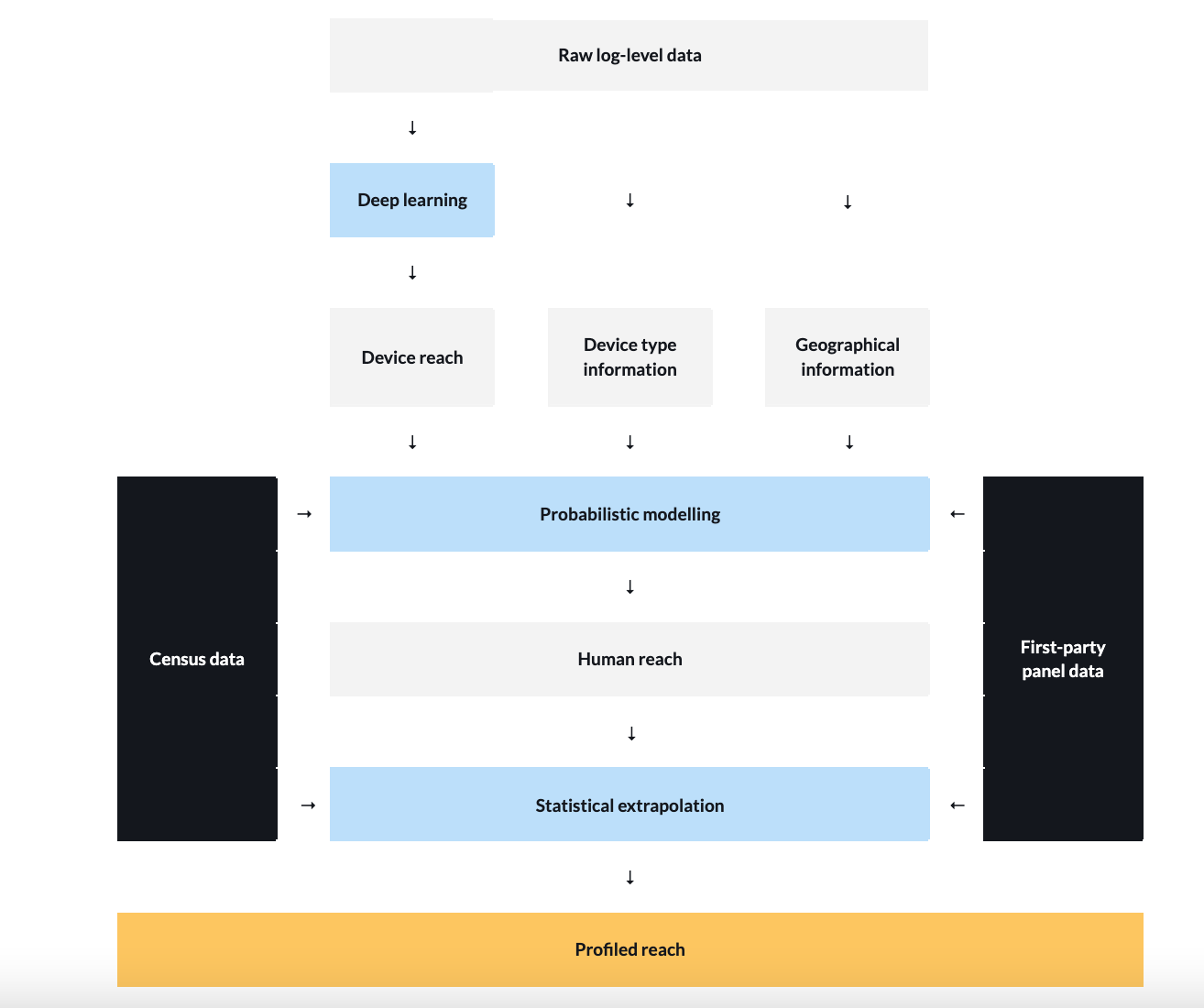

AudienceProject uses advanced technology and robust methodology to measure campaigns running on the open web. By using deep learning and probabilistic modelling in combination with first-party panel data, AudienceProject provides audience reach and frequency insights for open web campaigns in a cookieless and privacy-safe manner.

Today’s media industry is increasingly defined by walled gardens, different ID universes, fragmented media consumption and privacy. This challenges marketers’ ability to measure and analyse campaigns holistically via cookie-based measurement.

AudienceProject overcomes this challenge by using three enablers: direct clean room integrations, advanced technology and robust methodology.

When measuring campaigns on walled gardens like Meta and YouTube, we utilise our direct clean room integrations with those platforms. However, for open web campaigns, such integrations are not possible, and thus, we use other means to measure these. More specifically, we use deep learning and probabilistic modelling in combination with first-party panel data to provide audience reach and frequency insights for open web campaigns.

How it works

Our general measurement strategy is to use and combine all relevant data for the specific measurement purpose. In the case of open web, this means combining information from loglines with information derived from our measurement panel.

Raw log-level data delivers an abundance of potentially useful information - even when completely stripped of personally identifiable information (PII). Based on raw log-level data such as geographical locations, timestamps, user agent data, etc., we initially use our deep learning model to estimate how many devices are reached by a campaign.

To ensure that we deliver precise device reach estimates, our deep learning model is trained with high-quality and high-volume data from our historical campaign measurements and online behavioural targeting. At the same time, we continuously validate the model's output with a critical eye and fine-tune the model as new learnings emerge.

When we have calculated the device reach, we combine it with our knowledge about the “device universe”, geographical information and demographic information derived from our consented and representative measurement panel. This allows us to estimate how many humans are reached by a campaign and thus provides insights into audience reach and frequency. The profiled reach is then modelled based on our consented and representative measurement panel.

As the input of the measurement is aggregated data, the measurement is based on groups, not individuals, ensuring that it is done in a fully privacy-safe manner.
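The step from device reach to audience reach and frequency can be illustrated with a minimal sketch. It is not AudienceProject's actual model: the function names, the single `devices_per_person` factor and all input numbers are assumptions chosen for illustration, and the real methodology combines far more panel information than one ratio.

```python
def people_reach(device_reach: float, devices_per_person: float) -> float:
    """Convert an estimated device count into an estimated number of people."""
    if devices_per_person <= 0:
        raise ValueError("devices_per_person must be positive")
    return device_reach / devices_per_person


def average_frequency(impressions: int, reach: float) -> float:
    """Average number of impressions per reached person."""
    return impressions / reach if reach > 0 else 0.0


# Hypothetical inputs, for illustration only.
impressions = 1_200_000
device_reach = 300_000      # assumed output of a device-reach model
devices_per_person = 1.5    # assumed panel-derived "device universe" factor

reach = people_reach(device_reach, devices_per_person)
frequency = average_frequency(impressions, reach)
print(f"people reached: {reach:,.0f}, average frequency: {frequency:.1f}")
```

With these made-up inputs, 300,000 devices shared at 1.5 devices per person correspond to 200,000 people, each seeing the campaign six times on average.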

Measurement of audience reach and frequency for open web campaigns:

What is deep learning?

Deep learning is a framework of machine learning methods that utilises flexible mathematical models that contain a very large number of free parameters, enabling modelling of a wide range of phenomena. Systems utilising deep learning have successfully solved many challenges, including image classification, fraud detection, language translation, and even art creation (the latter to a debatable degree of success).

This can sound a bit abstract, so let’s simplify it with a real-life example.

Imagine you are given the task of telling how many people are visiting a public park on a given day. The only limitation is that you are not allowed to count the number of people. This means that you need to look for other traces that can help you estimate how many people are visiting the park.

For example, you can look at how many to-go coffee cups are in the bins, how many footprints are on the trails and how many marks picnic blankets have left in the grass. These traces give you an idea of the number of people visiting the park, and with historical data, you can even validate and readjust the estimate. Maybe you learn that only half of the park visitors usually bring to-go coffee or that people normally have picnics only when the weather allows it.

In the same way as you are looking for traces and using your prior knowledge to estimate the number of people, AudienceProject uses deep learning to estimate the number of devices reached by open web campaigns.
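The park analogy can be written out as a few lines of arithmetic. This sketch is not deep learning: it hard-codes the "traces per visitor" rates that a trained model would learn from historical data, and it simply averages the estimates from each trace. The coffee rate of 0.5 comes from the example above; the picnic rate and all counts are hypothetical.

```python
def estimate_visitors(traces: dict[str, float],
                      traces_per_visitor: dict[str, float]) -> float:
    """Average the visitor estimates implied by each kind of trace."""
    estimates = [
        count / traces_per_visitor[name]
        for name, count in traces.items()
        if traces_per_visitor.get(name, 0) > 0
    ]
    if not estimates:
        raise ValueError("no usable traces")
    return sum(estimates) / len(estimates)


# Hypothetical observations from one day in the park.
observed = {"coffee_cups": 400, "picnic_marks": 225}

# Prior knowledge: half of the visitors bring to-go coffee (from the example
# above); one picnic mark per four visitors is an invented rate.
rates = {"coffee_cups": 0.5, "picnic_marks": 0.25}

visitors = estimate_visitors(observed, rates)
print(f"estimated visitors: {visitors:.0f}")
```

Here the coffee cups suggest 800 visitors and the picnic marks suggest 900, so the combined estimate is 850. A deep learning model plays the same role with many more signals and with rates learned from data rather than fixed by hand.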

What is probabilistic modelling?

Probabilistic modelling is a type of statistical modelling that incorporates probability distributions to account for uncertainty when drawing conclusions from the data. By combining prior knowledge and stochastic variables, probabilistic modelling can predict the most likely outcome of a non-deterministic process. This non-determinism can stem from truly random or unpredictable events, or from real-world facts that are simply unknown to the model.
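As a small illustration of the idea, the sketch below estimates a share (for example, the share of reached panel members who belong to a target group) together with its uncertainty. It uses a textbook Beta-Binomial model with a uniform prior, which is an assumption for this example and not necessarily the model AudienceProject uses; the panel counts are hypothetical.

```python
import random


def share_with_uncertainty(in_target: int, panel_size: int,
                           draws: int = 10_000, seed: int = 0):
    """Posterior mean and 90% credible interval for a share.

    Assumes a uniform Beta(1, 1) prior, so the posterior is
    Beta(1 + in_target, 1 + panel_size - in_target).
    """
    rng = random.Random(seed)
    alpha = 1 + in_target
    beta = 1 + panel_size - in_target
    # Draw from the posterior and read the interval off the sorted samples.
    samples = sorted(rng.betavariate(alpha, beta) for _ in range(draws))
    mean = alpha / (alpha + beta)
    low = samples[int(0.05 * draws)]
    high = samples[int(0.95 * draws)]
    return mean, low, high


# Hypothetical panel: 400 reached members, 120 of them in the target group.
mean, low, high = share_with_uncertainty(in_target=120, panel_size=400)
print(f"share: {mean:.3f} (90% interval {low:.3f} to {high:.3f})")
```

Instead of reporting a single number, the model reports a most likely value plus a range, which is how probabilistic modelling accounts for what the data cannot tell us with certainty.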

What is statistical extrapolation?

In many instances, we do not have access to a direct measurement of the objects we want to know about. Instead, we look at patterns, connections and correlations for similar objects that we can observe, and extrapolate from these to the objects we want to know about. This rests on the assumption that the patterns seen in the objects we extrapolate from also hold for the objects we extrapolate to.
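A minimal sketch of extrapolation from a panel to a population is shown below. The group names, panel sizes and population figures are all hypothetical, and the key assumption (stated in the code) is that within each group the panel behaves like the population it represents.

```python
def extrapolate_reach(groups: dict[str, dict[str, float]]) -> float:
    """Scale the reached share observed in each panel group to its population.

    Assumes the share of reached panel members in a group equals the share
    of reached people in the corresponding part of the population.
    """
    total = 0.0
    for name, g in groups.items():
        if g["panel_size"] <= 0:
            raise ValueError(f"group {name!r} has no panel members")
        total += g["population"] * g["panel_reached"] / g["panel_size"]
    return total


# Hypothetical panel observations per age group.
groups = {
    "18-34": {"panel_size": 200, "panel_reached": 50, "population": 1_000_000},
    "35+":   {"panel_size": 300, "panel_reached": 30, "population": 2_000_000},
}

estimated_reach = extrapolate_reach(groups)
print(f"estimated people reached: {estimated_reach:,.0f}")
```

Extrapolating per group rather than over the whole panel matters when the groups differ in both behaviour and size: here a quarter of the younger group and a tenth of the older group are reached, giving 250,000 plus 200,000 people.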

Traditional cookie-based measurement vs new AudienceProject measurement

To validate our measurement methodology, we have compared measurement results from hundreds of campaigns across our markets where both our traditional cookie-based measurement methodology and our new measurement methodology, based on deep learning and probabilistic modelling, were used.

The graphs below show the reach build-up over time for two of the campaigns where we have made the comparison. The grey line represents the reach build-up for a campaign measured with our traditional cookie-based measurement methodology, whereas the blue line represents the same campaign but measured with our new measurement methodology.

The graphs show that the reach calculations based on our new measurement methodology are very close to those made with our traditional cookie-based measurement methodology.

Reporting and benefits

Reporting

- Metrics: Reach in target group, frequency, hitrate, on-target percentage and events in target group

- Reach building event types: Impressions, viewable impressions, clicks and video quartiles

- Demographics: All demographics (gender, age, income, employment, education, household size, children in household)

- Reporting period: 84 days

Benefits

- Get independent measurement of open web campaigns

- Understand the total, de-duplicated reach generated by campaigns on the open web and other channels

- Understand the incremental reach generated by open web campaigns